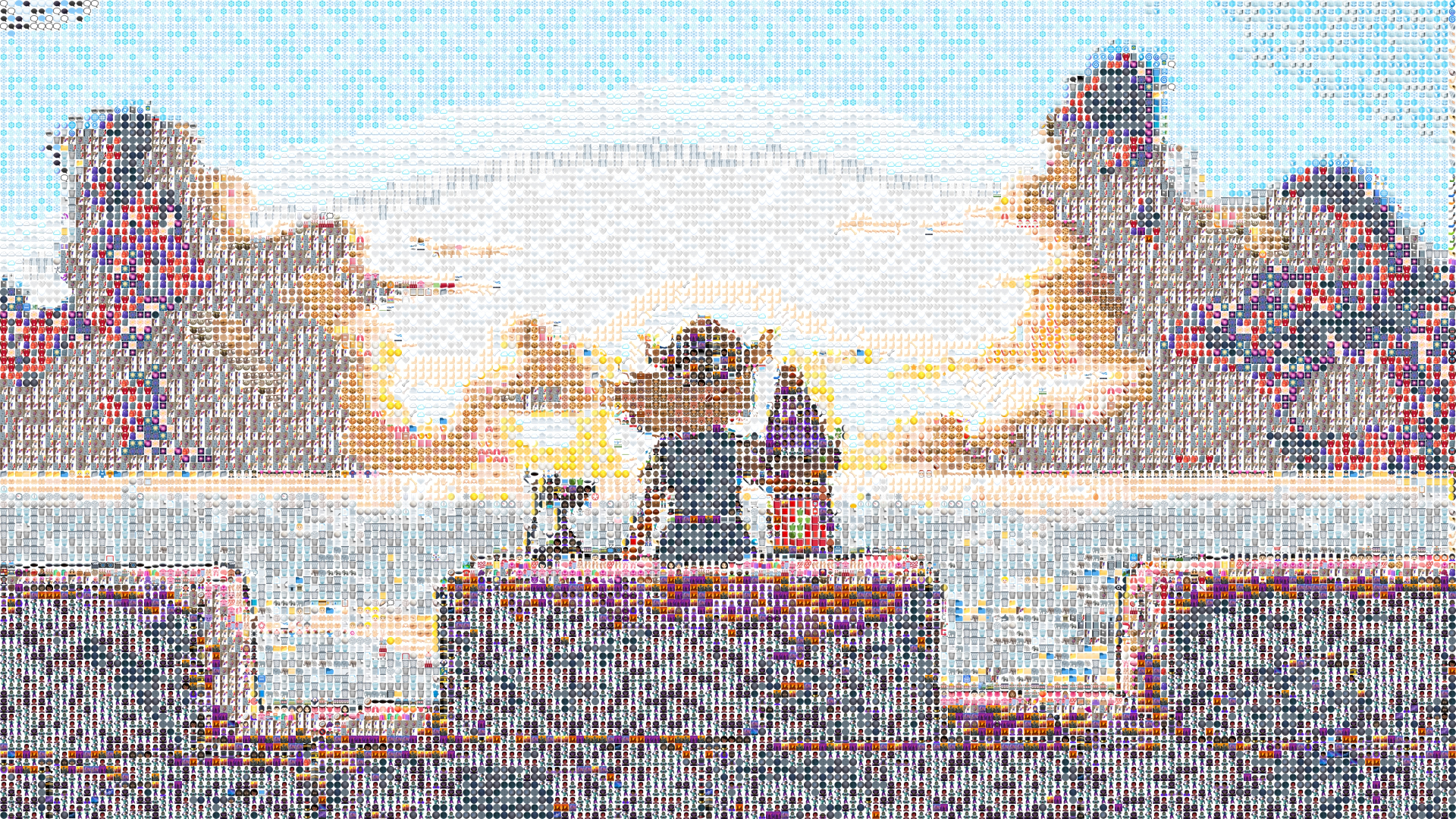

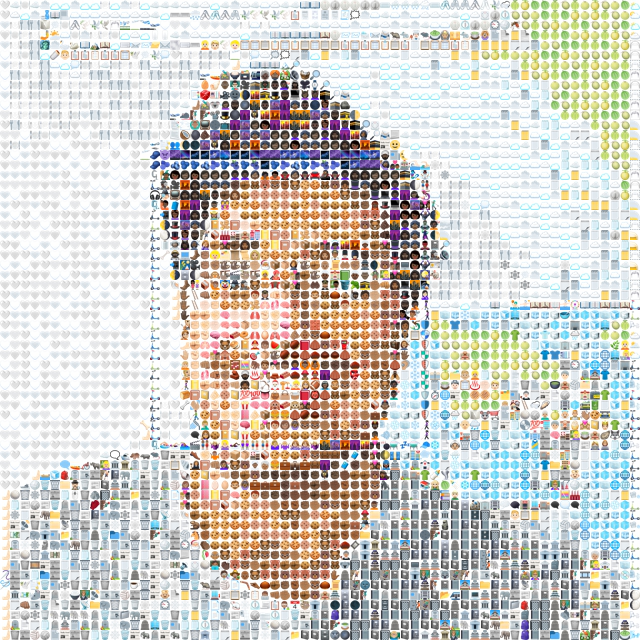

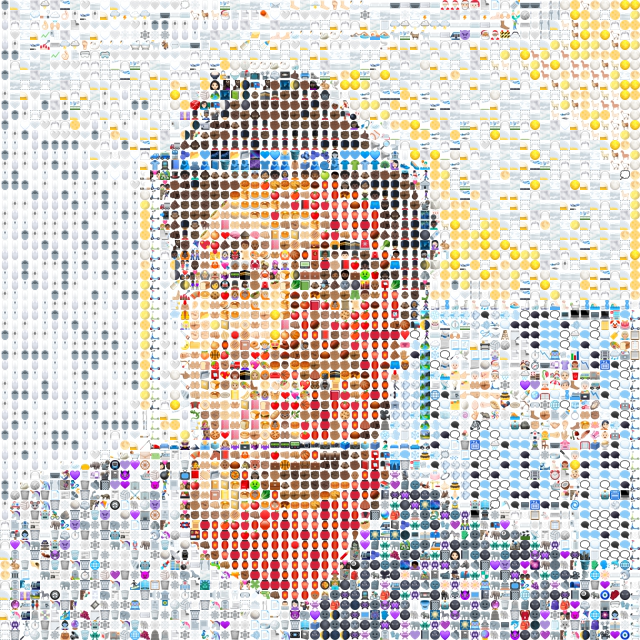

After seeing @bwasti’s creative image-to-emoji project, I created my own PyTorch implementation that transforms photos into mosaics made of 10x10 pixel emoji tiles.

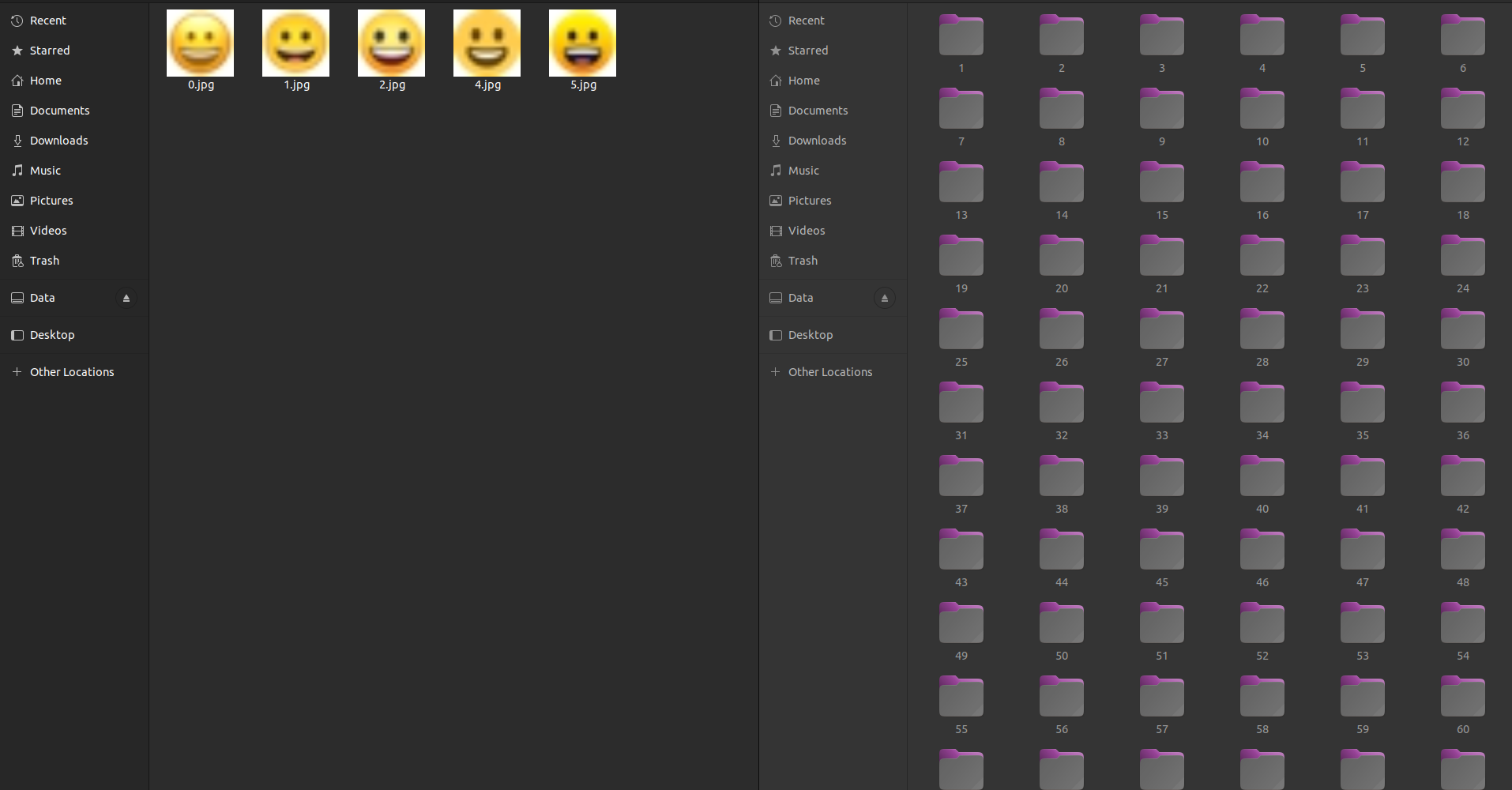

I started by building a dataset from the standard emoji images at unicode.org, using their 14x14 pixel PNG versions.

To improve model training, I applied basic image augmentation with Gaussian blur and slight rotation to create more variation in the training data.

For the model, I first overfitted torchvision.models.resnet18() and then this small model. Both worked fine, but I prefer the output of the ResNet18.

```python
import torch
import torch.nn as nn


class EmojiNet(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        # Two conv + pool stages: a 10x10 input tile is reduced to 32 x 1 x 1.
        self.feature_extractor = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(in_channels=8, out_channels=32, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
        )
        # Maps the 32 flattened features to one logit per emoji class.
        self.classifier = nn.Sequential(
            nn.Linear(in_features=32, out_features=256),
            nn.ReLU(),
            nn.Linear(in_features=256, out_features=num_classes),
        )

    def forward(self, x):
        x = self.feature_extractor(x)
        x = torch.flatten(x, 1)  # keep the batch dimension, flatten the rest
        x = self.classifier(x)
        return x
```

Resnet18 output:

EmojiNet output:

I need to work on improving its speed (I have a problem making predictions on a batch of inputs), but I’m super happy with the results. I’ll clean up the code and release it sometime in the future and update this page :)
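One possible direction for the batching problem, as a minimal sketch rather than the project's code: cut the photo into 10x10 tiles with `Tensor.unfold`, stack them into a single batch, and run the model once. The helper `image_to_tiles`, the placeholder linear model, and the photo size are hypothetical.

```python
import torch
import torch.nn as nn

TILE = 10  # tile size in pixels, matching the 10x10 emoji tiles


def image_to_tiles(image: torch.Tensor, tile: int = TILE) -> torch.Tensor:
    """Split a (C, H, W) image into a (N, C, tile, tile) batch of tiles."""
    c, h, w = image.shape
    # Crop so that height and width are multiples of the tile size.
    image = image[:, : h - h % tile, : w - w % tile]
    # Two unfolds give shape (C, rows, cols, tile, tile).
    tiles = image.unfold(1, tile, tile).unfold(2, tile, tile)
    # Put the grid dimensions first, then merge them into one batch dimension.
    return tiles.permute(1, 2, 0, 3, 4).reshape(-1, c, tile, tile)


# Placeholder classifier with 5 emoji classes; any model taking 10x10 tiles fits here.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * TILE * TILE, 5))
model.eval()

photo = torch.rand(3, 45, 62)   # stand-in for a real photo tensor
tiles = image_to_tiles(photo)   # every tile of the photo in one batch

with torch.no_grad():
    emoji_ids = model(tiles).argmax(dim=1)  # one predicted emoji index per tile

# Arrange the predictions back into the mosaic grid (rows x cols).
grid = emoji_ids.reshape(45 // TILE, 62 // TILE)
```

Each entry of `grid` is the index of the emoji to paste at that position in the mosaic.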